The trouble with search today is that people put too much trust in search engines – online, resume, social, or otherwise.

I can certainly understand and appreciate why people and companies would want to try and create search engines and solutions that “do the work for you,” but unfortunately the “work” being referenced here is thinking.

I read an article by Clive Thompson in Wired magazine the other day titled, “Why Johnny Can’t Search,” and the author opens up with the common assumption that young people tend to be tech-savvy.

Interestingly, although Generation Z is also known as the “Internet Generation” and is comprised of “digital natives,” they apparently aren’t very good at online search.

The article cites a few studies, including one in which a group of college students were asked to use Google to look up the answers to a handful of questions. The researchers found that the students tended to rely on the top results.

Then the researchers changed the order of the results for some of the students in the experiment. More often than not, they still went with the (falsely) top-ranked pages.

The professor who ran the experiment concluded that “students aren’t assessing information sources on their own merit—they’re putting too much trust in the machine.”

I believe that the vast majority of people put too much trust in the machine – whether it be Google, LinkedIn, Monster, or their ATS.

Trusting top search results certainly isn’t limited to Gen Z – I believe it is a much more widespread issue, which is only exacerbated by “intelligent” search engines and applications using semantic search and NLP that lull searchers into the false sense of security that the search engine “knows” what they’re looking for.

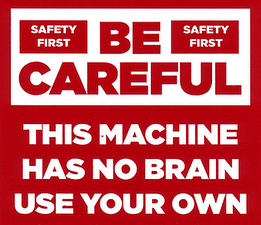

This is Your Search Without a Brain

It’s easy to see why people and companies create search products and services using semantic search and NLP that claim to be able to make searching “easier” – they are looking to sell a product based on the value of making your life easier, at least when it comes to finding stuff.

If you take a look at some of the marketing materials for intelligent search and match search products, you’ll find value propositions such as “Stop wasting time trying to create difficult and complex Boolean search strings,” “Let intelligent search and match applications do the work for you,” and “A single query will give you the results you need – no more re-querying, no more waste of time!”

I love saving time and getting to what I want faster, but my significant issue with “intelligent search and match” applications is that they try to determine what’s relevant to me.

And that’s a rather large issue, because only I know what I am looking for.

It’s critical to be reminded that the definition of ‘relevance,’ specifically with regard to information science and information retrieval, is “how well a retrieved document or set of documents meets the information need of the user.”

The only person that can make the judgment of how well a search result meets their information need is the person conducting the search, because it’s their specific information need.

Any reference to “relevance” by a search engine, whether it be Google, Bing, LinkedIn, Monster, etc., is based purely on the keywords, operators, and/or facets used.

Search engines don’t know what you want – they only know what you typed into or selected from the search interface.

Poor use of keywords, operators, or facets won’t stop you from getting results. All searches “work,” as I am fond of saying – but the quality or relevance will likely be low.

Of course, that assumes that the person conducting the search is actually proficient at judging the quality or the relevance of the results – comparing results to their specific information need and experimenting with different combinations of keywords, operators and facets to look for changes in relevance.
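To make the point that every search “works” a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the four-document corpus, the `search` function, and its `all_terms` / `any_terms` / `none_terms` parameters (stand-ins for AND, OR, and NOT). No real search engine is this simple, but the behavior is the same in spirit: the engine matches what was typed, not what was meant.

```python
# Toy corpus, invented purely for illustration.
DOCS = {
    "r1": "senior java developer spring hibernate",
    "r2": "javascript front end developer react",
    "r3": "java coffee shop barista",
    "r4": "software engineer java spring microservices",
}


def search(all_terms=(), any_terms=(), none_terms=()):
    """Return ids of documents matching a simple AND / OR / NOT query."""
    hits = []
    for doc_id, text in DOCS.items():
        words = set(text.split())
        # AND: every required term must be present.
        if not all(term in words for term in all_terms):
            continue
        # OR: if alternatives were given, at least one must be present.
        if any_terms and not any(term in words for term in any_terms):
            continue
        # NOT: no excluded term may be present.
        if any(term in words for term in none_terms):
            continue
        hits.append(doc_id)
    return hits


# A vague query still "works": it returns results, barista included.
vague = search(all_terms=["java"])

# A more deliberate query returns fewer, better-targeted results.
refined = search(
    all_terms=["java"],
    any_terms=["developer", "engineer"],
    none_terms=["barista"],
)

print(vague)
print(refined)
```

Both queries return results. Only the person searching can say that the barista was never what they were after.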

Related Does Not Equal Relevant

I personally never implicitly trust first page or top ranked search results online, nor top ranked results on LinkedIn, Monster, or anywhere I search. Some of the best search results I have ever found were buried deep in result sets – far past where most people would typically review, and essentially in the territory of results the search engine deemed least “relevant.” Pshaw!

One reason for this is that I understand that any search engine I use, no matter how “dumb” (straight keyword matching) or “intelligent” (semantic/NLP), can only work with the terms I give it. What do you think the most “intelligent” search engine can do with poor user input?

When it comes to searching, unfortunately everyone’s a winner, because every search “works” and returns results. The problem is that few searchers know how to critically examine search results for relevance.

Regardless of how “intelligent” a search engine might be, it can only try to find terms and concepts related to my user input.

This is an often overlooked but critical issue – just because terms might be related, it does not mean they are relevant to my information need.

It certainly helps to understand that some of the most relevant search results can’t actually be retrieved by the obvious keywords, titles or phrases, or even those that a semantic search algorithm deems related to them. In fact, some of the best results simply cannot be directly retrieved – see my post on Dark Matter for more information on the concept.

However, to appreciate the concept that no single search, no matter how enhanced by technology, can find all of the relevant (by human standards and judgment) results available to be retrieved, you have to know a thing or two about information retrieval in the first place.

And if you already lack the ability to critically judge search results and evaluate them for relevance, how can you be expected to be able to evaluate and critically examine the search results returned by intelligent search and match applications?

The “black box” matching algorithms of intelligent search and match applications pose significant issues to users in that searchers have absolutely no insight as to why the search engine returns the results it does. Without this, what option does a user have other than to implicitly trust the search engine’s matching algorithm?

Searching Ain’t Easy

Who says search has to be easy anyway?

Just because you might want it to be, should it be? Does it have to be?

Let’s face it – a lot of people look for the easy way out. The sheer volume of advertisements pushing diet supplements that claim you can lose a ton of weight without having to watch what you eat and exercise is evidence that people want to get the results they want without working for them.

You know the best way to lose weight? A healthy diet combined with regular exercise. The problem is that eating healthy and exercising regularly require discipline and hard work.

I’m not saying there isn’t a better way to search – I am a fan of Thomas Edison’s belief that “There is always a better way.”

However, I believe that the better way, specifically when it comes to information retrieval, involves discipline and the hard work of people using critical thought in the search process – not short-cutting or completely removing it from the equation.

And I am not alone.

There is already considerable work being done to create new kinds of search systems that depend on continuous human control of the search process. It’s called Human-Computer Information Retrieval (HCIR) – which is the study of information retrieval techniques that bring human intelligence into the search process.

Truly intelligent search systems should not involve limiting or removing human thought, analysis, and influence from the search process – in fact, they should and can involve and encourage user influence.

When you break it down, the information retrieval process has two basic parts:

- The user enters a query, which is a formal statement of their information need

- The search engine returns results

The key, in my opinion, is that the search engine should return results in a “Is this what you were looking for?” manner and allow you to intelligently refine your results, as opposed to a “This is what you were looking for” manner.

There’s a BIG difference.

The former begs for user influence and input; the latter does not – it assumes that it found what you wanted.

The bottom line is that no matter what you are using to search for information, only you know what you’re looking for, and therefore only you can judge the relevance of the search results returned.

Intelligent search isn’t easy, because you actually have to think before and after hitting the search button.

The Intelligent Search Process

As I have written before, searching should not be a once-and-done affair – there is no mythical “one search to find them all.”

Searching is ideally an iterative process that requires intelligent user input.

Here is an example of an intelligent, iterative search process applied to sourcing talent:

- Analyzing, understanding, and interpreting job opening/position requirements

- Taking that understanding and intelligently selecting titles, skills, technologies, companies, responsibilities, terms, etc. to include (or purposefully exclude!) in a query employing appropriate Boolean operators and/or facets and query modifiers

- Critically reviewing the results of the initial search to assess relevance as well as scanning the results for additional and alternate relevant search terms, phrases, and companies

- Based upon the observed relevance of and intel gained from the search results, modifying the search string appropriately and running it again

- Repeating the previous two steps (reviewing the results and refining the search string) until an acceptably large volume of highly relevant results is achieved
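The loop described in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PROFILES` data, the `RELEVANT` set (which stands in for a human reviewer's judgment), and the `run_query`, `judge`, `refine`, and `iterative_search` functions are all hypothetical names invented here. In real sourcing, the judging and refining steps are done by a thinking person, not by code.

```python
# Hypothetical candidate profiles, invented for illustration.
PROFILES = [
    "java developer spring",
    "java engineer microservices",
    "java barista coffee",
    "software engineer jvm kotlin",
    "javascript developer react",
]

# Stand-in for human judgment: which profiles actually meet the need.
RELEVANT = {PROFILES[0], PROFILES[1], PROFILES[3]}


def run_query(query):
    """Match profiles containing any 'any' term and no 'none' term."""
    hits = []
    for profile in PROFILES:
        words = set(profile.split())
        if words & query["any"] and not (words & query["none"]):
            hits.append(profile)
    return hits


def judge(profile):
    """Placeholder for a person critically reviewing one result."""
    return profile in RELEVANT


def refine(query, results, relevant):
    """Harvest new terms from good results; exclude terms unique to bad ones."""
    good_words, bad_words = set(), set()
    for profile in results:
        target = good_words if profile in relevant else bad_words
        target |= set(profile.split())
    return {
        "any": query["any"] | good_words,
        "none": query["none"] | (bad_words - good_words - query["any"]),
    }


def iterative_search(query, min_relevant=3, max_rounds=5):
    """Search, review, refine, and repeat until enough relevant results."""
    relevant, history = [], []
    for _ in range(max_rounds):
        results = run_query(query)
        relevant = [p for p in results if judge(p)]
        history.append((len(results), len(relevant)))
        if len(relevant) >= min_relevant:
            break
        query = refine(query, results, relevant)
    return relevant, history


found, history = iterative_search({"any": {"java"}, "none": set()})
print(history)  # (results returned, results judged relevant) per round
```

In this toy run, the first pass returns three results of which two are relevant. The refined second pass returns four results of which three are relevant, including one profile that never mentions “java” at all. It also still returns one related-but-irrelevant profile, which is exactly why the review step cannot be skipped.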

Anyone can enter search terms and hit the “search” button, but not everyone can effectively and intelligently search.

Until you’ve witnessed intelligent and iterative search in action, you likely wouldn’t know the difference between “great” search results, “good” search results and “bad” search results.

It’s as dramatic as the difference between an experienced professional offshore fisher, a recreational fisher, and someone going offshore fishing for the first time.

The ocean holds the same fish for everyone fishing it. While a first-time or recreational fisher can get lucky every once in a while, only a person who really knows what they’re doing can get “lucky” on a consistent basis and catch the fish the recreational fisher only dreams of catching.

Final Thoughts

The ability to enter in some search terms and click the “search” button doesn’t convey any supernatural search ability, but it does certainly make people feel like they are good at searching, because unless you mistype something, everyone’s a winner.

Ultimately, search engines of all types retrieve information, but information requires analysis, and only humans can analyze and interpret for relevance.

Eiji Toyoda, the former President of Toyota Motor Corp., has observed that “Society has reached the point where one can push a button and immediately be deluged with…information. This is all very convenient, of course, but if one is not careful there is a danger of losing the ability to think.”

Critical thinking is perhaps the most important skill a knowledge worker can possess.

The reason so many people stink at search is that most people simply don’t think before or after they search, and they place too much trust in the machine.

Additionally, the quality of the search terms/info entered directly affects the quality of the results. “Garbage in = garbage out” certainly applies here. And effective searching is rarely a “once and done” affair – the ability to critically evaluate search results for relevance and successively refine the search criteria to increase relevance is the key to true “intelligent search.”

“Black box” matching algorithms can be wonders of technology and engineering, but they pose significant problems in that searchers have absolutely no insight as to why they return the results they do, and in many cases, the engineers creating these semantic/NLP matching algorithms assume they know what their users are looking for better than the users themselves. I’m sorry if I am the only person offended by such an assumption.

Okay, I’m not sorry.

I love technology, and I use and have used some of the best matching technology available, but I also know it’s not a good idea to try to limit or remove intelligent critical thinking from the search process and completely replace it with matching algorithms.

The term human–computer information retrieval was coined by Gary Marchionini, whose main thesis is that “HCIR aims to empower people to explore large-scale information bases but demands that people also take responsibility for this control by expending cognitive and physical energy.” (emphasis mine)

For those who simply want information systems to magically provide them with the most relevant results at the click of a button, you should take special note of the fact that experts in the field of HCIR do not believe that people should step out of the information retrieval process and let semantic search/NLP algorithms/AI be solely responsible for the search process.

If you want to get better search results, use the latest technologies, but don’t put too much trust in the machine.

Instead, put some skin in the game, take responsibility for the search process, and expend some cognitive energy critically thinking through not only your search input, but also the results for relevance.

“In the age of information sciences, the most valuable asset is knowledge, which is a creation of human imagination and creativity. We were among the last to comprehend this truth and we will be paying for this oversight for many years to come.” — Mikhail Gorbachev, 1990

Strictly For the Search Geeks

Check out this HCIR Challenge, and at least read the introduction which compares and contrasts precision vs. recall, and references iterative query refinement.
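For anyone who wants to see precision and recall as numbers, here is a small Python sketch using the standard definitions: precision is the share of retrieved results that are relevant, and recall is the share of all relevant results that were retrieved. The `precision_recall` function and the letter-named result sets are hypothetical, made up for this example, and the “relevant” set assumes a human has already judged every result.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one search, given human judgments."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall


# Suppose four results in the whole database truly meet the need.
truly_relevant = ["a", "b", "c", "d"]

# A tight query: everything it returns is good, but it misses half.
narrow = precision_recall(["a", "b"], truly_relevant)

# A loose query: it finds everything, buried among irrelevant results.
broad = precision_recall(["a", "b", "c", "d", "w", "x", "y", "z"], truly_relevant)

print(narrow)  # (1.0, 0.5)
print(broad)   # (0.5, 1.0)
```

Neither query is “right” on its own. Iterative refinement is how a searcher works toward results that score well on both.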